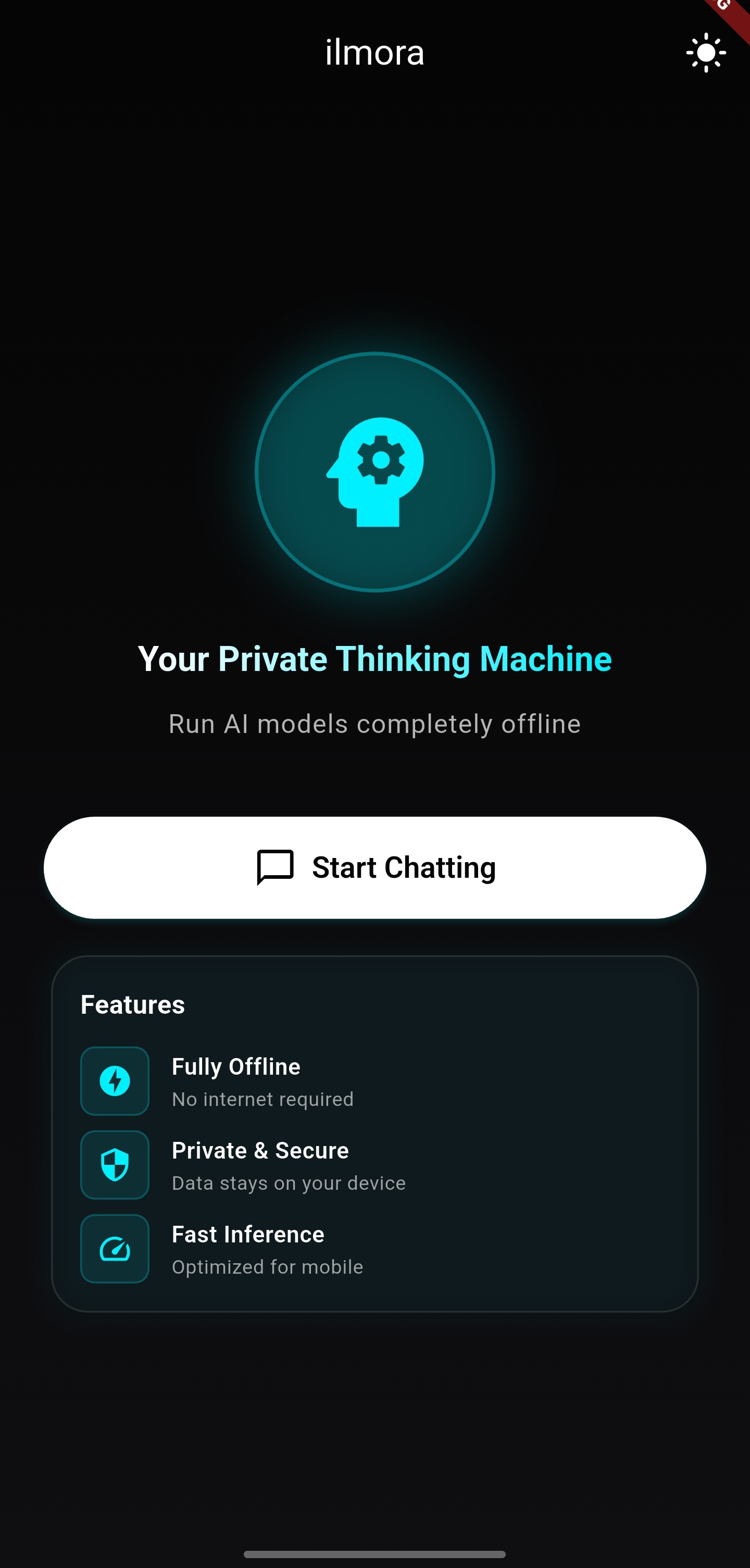

Your Private Thinking Machine

Run powerful large language models (LLMs) entirely on your Android device. Zero server costs. Complete privacy. No internet required.

0% Data Tracking

Offline Architecture

~15 tokens/s Inference Speed*

GGUF Model Format

*Measured on a Snapdragon 8 Gen 2 with a 1B-parameter model at Q4_K_M quantization.

Core Capabilities

Intelligence at the Edge

Total Privacy

100% on-device inference. No API keys, no telemetry, no network calls. Your thoughts stay on your phone.

Persistent Memory

ilmora remembers. Facts and conversations are stored locally and condensed through summarization, so they survive app restarts.

Coherence Engine

Automatic retries with temperature decay: if a response starts looping or degrades into gibberish, generation is retried at a lower temperature to keep the output coherent.
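A minimal sketch of how retry-with-temperature-decay could work, written in Python for illustration. The `generate` callable stands in for a llama.cpp sampling call, and the degeneracy heuristic (a repeated word n-gram, or mostly non-letter output) plus the values of `start_temp`, `decay`, `min_temp` and `max_attempts` are all assumptions, not ilmora's actual implementation.

```python
from typing import Callable, Dict, Optional, Tuple


def looks_degenerate(text: str, ngram: int = 4, max_repeats: int = 3) -> bool:
    """Hypothetical heuristic: flag empty, mostly non-letter, or looping output."""
    words = text.split()
    if not words:
        return True  # empty or whitespace-only output
    # Gibberish check: fewer than half of the non-space characters are letters.
    non_space = sum(not c.isspace() for c in text)
    letters = sum(c.isalpha() for c in text)
    if letters / non_space < 0.5:
        return True
    # Loop check: any word n-gram seen more than max_repeats times.
    counts: Dict[Tuple[str, ...], int] = {}
    for i in range(len(words) - ngram + 1):
        key = tuple(words[i:i + ngram])
        counts[key] = counts.get(key, 0) + 1
        if counts[key] > max_repeats:
            return True
    return False


def generate_with_decay(
    generate: Callable[[str, float], str],  # hypothetical: (prompt, temperature) -> text
    prompt: str,
    start_temp: float = 0.8,
    decay: float = 0.7,
    min_temp: float = 0.1,
    max_attempts: int = 4,
) -> Optional[str]:
    """Retry generation, multiplying the temperature by `decay` after each bad attempt."""
    temp = start_temp
    for _ in range(max_attempts):
        text = generate(prompt, temp)
        if not looks_degenerate(text):
            return text
        temp = max(min_temp, temp * decay)
    return None  # every attempt failed; the caller decides what to show
```

Lowering the temperature concentrates sampling on higher-probability tokens; the `min_temp` floor keeps each retry from collapsing into fully greedy decoding.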

Quality Insights

Visual quality badges for each quantization level show the expected quality trade-off before you load a model.
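One way such badges could be derived, sketched in Python: read the quantization tag from a GGUF filename (for example `Q4_K_M` or `Q8_0`) and bucket it by bit width. The tier names and thresholds are hypothetical, chosen only to show the idea; they are not ilmora's published mapping, and real quality also depends on the model and the quantization method.

```python
import re
from typing import Optional, Tuple

# Matches llama.cpp-style quant tags such as Q4_K_M, Q8_0, IQ3_XS, F16, BF16, F32.
_QUANT_RE = re.compile(
    r"(?<![A-Za-z0-9])(I?Q(\d+)(?:_[A-Z0-9]+)*|B?F16|F32)(?![A-Za-z0-9])",
    re.IGNORECASE,
)


def quant_badge(filename: str) -> Optional[Tuple[str, str]]:
    """Return (quant tag, badge label) for a GGUF filename, or None if no tag is found.

    The tiers below are hypothetical and only illustrate bucketing by bit width.
    """
    m = _QUANT_RE.search(filename)
    if m is None:
        return None
    tag = m.group(1).upper()
    if m.group(2) is None:  # F16 / BF16 / F32: unquantized weights
        return tag, "Full precision"
    bits = int(m.group(2))
    if bits >= 8:
        label = "Excellent"
    elif bits >= 5:
        label = "High"
    elif bits == 4:
        label = "Balanced"
    elif bits == 3:
        label = "Low"
    else:
        label = "Very low"
    return tag, label
```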

Session Management

Real-time search, JSON export and import, and custom system prompts for different personas.
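A minimal sketch of JSON export, import, and search for chat sessions, in Python. The schema (a `version` field plus sessions holding a title, a per-session system prompt, and role/content messages) and all function names are hypothetical; ilmora's actual export format is not documented here.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List

EXPORT_VERSION = 1  # hypothetical schema version


@dataclass
class Message:
    role: str  # e.g. "user" or "assistant"
    content: str


@dataclass
class Session:
    title: str
    system_prompt: str = ""  # per-session persona
    messages: List[Message] = field(default_factory=list)


def export_sessions(sessions: List[Session]) -> str:
    """Serialize sessions to a JSON string (asdict also converts nested Messages)."""
    payload = {"version": EXPORT_VERSION, "sessions": [asdict(s) for s in sessions]}
    return json.dumps(payload, ensure_ascii=False, indent=2)


def import_sessions(text: str) -> List[Session]:
    """Parse a JSON export back into Session objects, rejecting unknown versions."""
    data = json.loads(text)
    if data.get("version") != EXPORT_VERSION:
        raise ValueError(f"unsupported export version: {data.get('version')!r}")
    return [
        Session(
            title=s["title"],
            system_prompt=s.get("system_prompt", ""),
            messages=[Message(role=m["role"], content=m["content"]) for m in s.get("messages", [])],
        )
        for s in data["sessions"]
    ]


def search_sessions(sessions: List[Session], query: str) -> List[Session]:
    """Case-insensitive substring search over titles and message text."""
    q = query.casefold()
    return [
        s
        for s in sessions
        if q in s.title.casefold() or any(q in m.content.casefold() for m in s.messages)
    ]
```

Carrying a version number in the export lets a later app release detect and migrate older files instead of misreading them.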

Auto-Templating

Automatic chat template detection for LLaMA, Mistral, Qwen, Phi, Gemma, and Alpaca.
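A sketch of how template detection might work, in Python, assuming the app can read the Jinja chat template that many GGUF files embed under the `tokenizer.chat_template` metadata key, and falls back to the model's filename when none is present. The marker strings are control tokens used by each format, but the detection order, the filename fallback, the default, and the single-turn prompt strings (BOS tokens omitted) are simplifications, not ilmora's actual logic. Here "llama3" stands for the Llama 3 format and Qwen is mapped to ChatML.

```python
import re
from typing import Optional

# Control-token markers checked in order against an embedded Jinja chat template.
_MARKERS = [
    ("<|im_start|>", "chatml"),         # Qwen (ChatML)
    ("<|start_header_id|>", "llama3"),  # Llama 3
    ("<start_of_turn>", "gemma"),       # Gemma
    ("<|end|>", "phi3"),                # Phi-3
    ("[INST]", "mistral"),              # Mistral
    ("### Instruction:", "alpaca"),     # Alpaca
]

# Hypothetical filename fallback: a name token starting with the hint picks the family.
_NAME_HINTS = [
    ("qwen", "chatml"),
    ("gemma", "gemma"),
    ("phi", "phi3"),
    ("mistral", "mistral"),
    ("alpaca", "alpaca"),
    ("llama", "llama3"),
]

# Single-turn prompts ending where the assistant should continue (BOS tokens omitted).
_FORMATS = {
    "chatml": "<|im_start|>user\n{msg}<|im_end|>\n<|im_start|>assistant\n",
    "llama3": "<|start_header_id|>user<|end_header_id|>\n\n{msg}<|eot_id|>"
              "<|start_header_id|>assistant<|end_header_id|>\n\n",
    "gemma": "<start_of_turn>user\n{msg}<end_of_turn>\n<start_of_turn>model\n",
    "phi3": "<|user|>\n{msg}<|end|>\n<|assistant|>\n",
    "mistral": "[INST] {msg} [/INST]",
    "alpaca": "### Instruction:\n{msg}\n\n### Response:\n",
}


def detect_template(chat_template: Optional[str], model_name: str = "") -> str:
    """Pick a template family from embedded template markers, else from the filename."""
    if chat_template:
        for marker, family in _MARKERS:
            if marker in chat_template:
                return family
    # Split on non-alphanumerics so "dolphin" does not match the "phi" hint.
    tokens = re.split(r"[^a-z0-9]+", model_name.lower())
    for hint, family in _NAME_HINTS:
        if any(tok.startswith(hint) for tok in tokens):
            return family
    return "chatml"  # hypothetical default when nothing matches


def format_prompt(family: str, user_message: str) -> str:
    """Wrap a single user message in the detected family's prompt format."""
    return _FORMATS[family].format(msg=user_message)
```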

Ready to sever the cord?

Experience the freedom of local AI. Built with Flutter, powered by llama.cpp.

Download for Android